Dynamic Dataflow Refresh For Dummies Using SQL Trigger In Power Automate

Level up your Power BI data refresh

Standard refresh settings in Power BI are about as flexible as I am after three decades of hockey—which is to say, not very.

With Power BI Pro, you can only configure the refresh to start at fixed hours, either at the beginning of the hour or halfway through. Obviously, this doesn’t take into account if your data source is actually up to date. Fortunately, there is a zero-code way in Power Automate to dynamically trigger the refresh of your Dataflows—and Semantic models—after your data source is reloaded.

This method comes with three main benefits:

Bulletproof sequencing — Never start the refresh too early or late.

Reduce refresh time — Remove all gaps from the process.

Custom notifications — Get notified about refresh success (or failure).

Here are the three steps you need to take to set it up for yourself.

Step #1: Configure The SQL Trigger

In this example the data source is a SQL database.

If you’re anything like me and this area is not your strongest point, you might need some help from IT 🤓 to get this set up. The SQL trigger I’m using to start the flow in Power Automate is called When an item is created (V2). This trigger requires an IDENTITY column in the table you’re using to work properly (you might want to read this article that speaks about some caveats).

Also, note that this is a premium trigger so you might need to check your Power Automate licensing.

The configuration of the trigger is rather straightforward.

First, set up a connection to the SQL Server and Database you want to use.

Second, select the Table you want to use from the Table Name dropdown. As far as I understand, you will only see tables with correctly set up IDENTITY column in this menu.

Third, configure optional parameters such as Filter Query. The syntax might be bit strange to but take a look at the above picture to get an idea how you can do it.

Now you should be ready to move on to the actual data refresh actions.

Step #2: Refresh Dataflows

I’m a big fan of using Dataflows (see this article for details).

That is why we will start by refreshing all Dataflows which are then later loaded to the Semantic model. Good news is that Power Automate has an action for this called—surprise, surprise—Refresh a dataflow.

Simply select the Workspace and the Dataflow you want to refresh from the dropdown menus and you’re good to go. Also, remember to rename the action for clearly indicate which dataflow you’re refreshing.

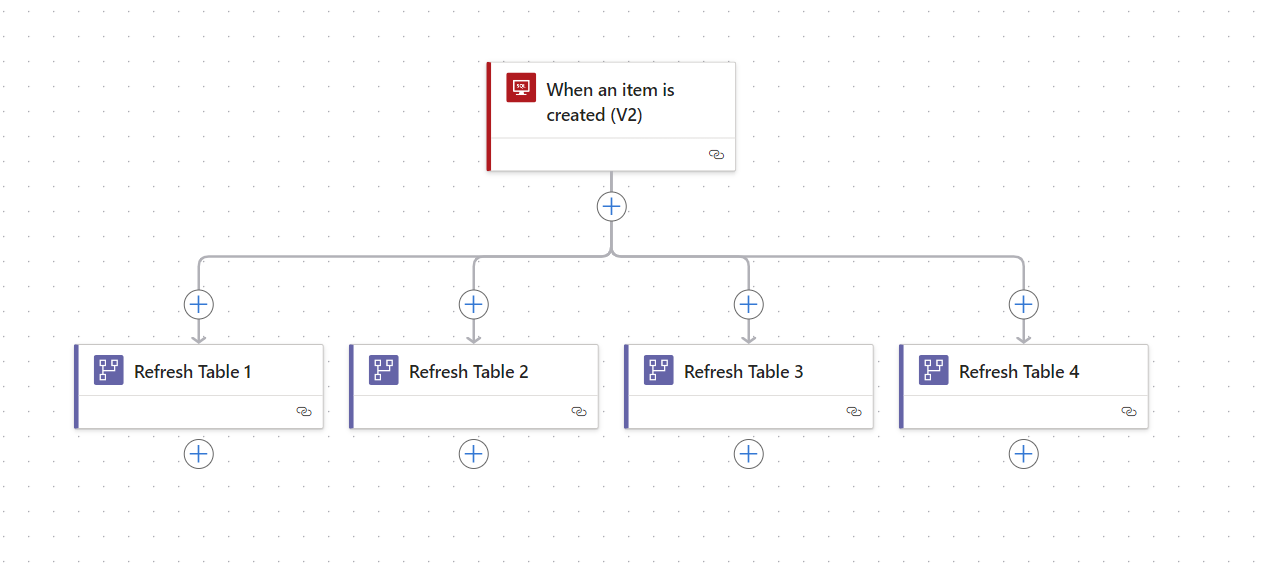

Because in my setup each Dataflows contains only one table, I need to refresh multiple Dataflows. The easiest way to make this happen is to copy the Refresh action and paste it as a parallel action which happens by right clicking on the plus symbol above the action.

Why parallel?

The reason is that when you refresh all Dataflow tables parallel, it will save you some time. It’s not uncommon at all that one of my fact tables takes 30 minutes to refresh. Put few of those in sequence and the flow would run for hours. No thanks.

Of course, please check that this method doesn’t choke 🪦 your data source. For me, it doesn’t.

The next phase is to capture when all of these Dataflows are finished refreshing.

For that, add a Trigger called When a dataflow refresh completes after each of the actions. You find it by changing the Action type to Triggers in the “Add an action” menu and searching for “Dataflow”.

After configuring the first Trigger, you can copy paste it below each refresh action (not as parallel). Just remember to make sure to reference the same Dataflow as in the above refresh action.

By default, the trigger will check every 3 minutes if the dataflow is refreshed but you can change this setting if you like.

This is how it should look after this step is done.

Step #3: Refresh Semantic Model

Final step is to refresh your data model & get notified.

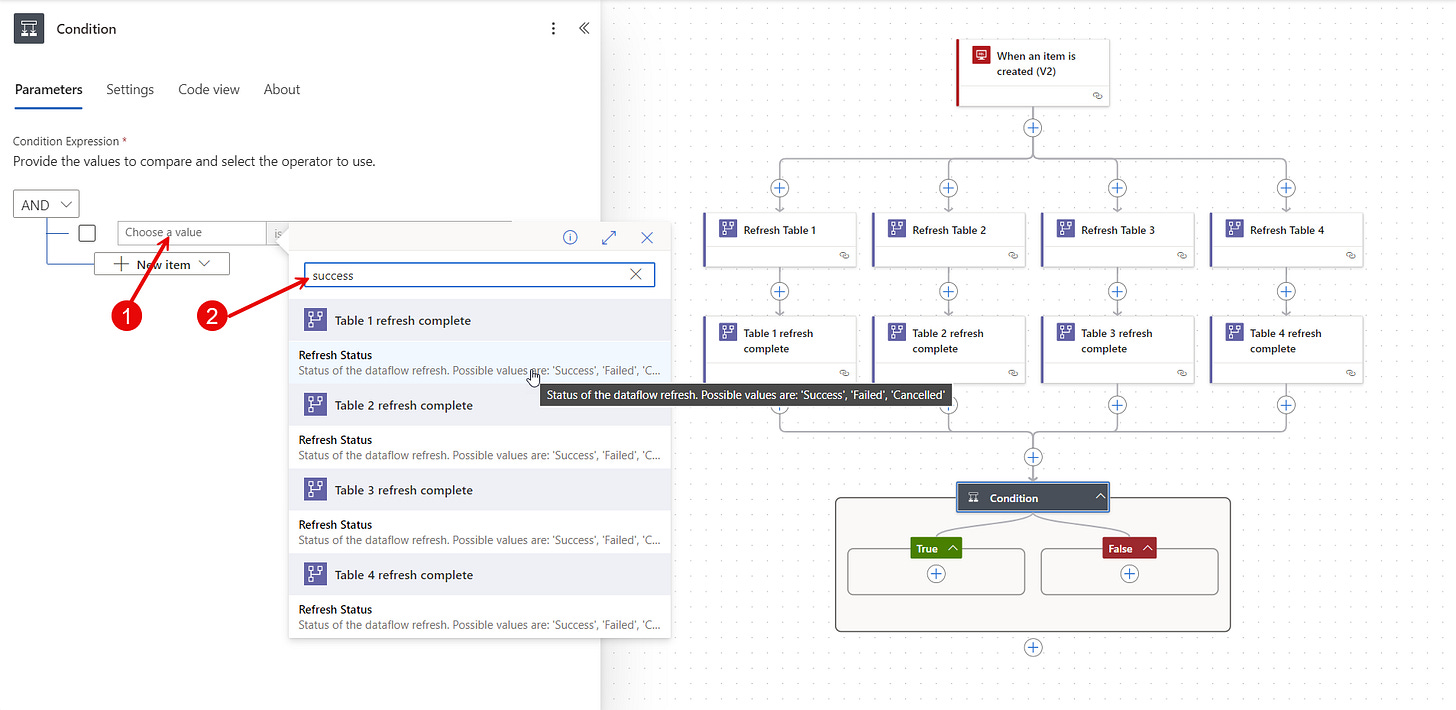

The catch here is to start the data model refresh only after all dataflows have completed refreshing. Start by adding a Condition action under one of the “refresh completed” actions but adjust the Run after settings.

Follow the below picture to see where to click around.

After all branches are connected with the Condition action, switch to the Parameter tab.

Add dynamic content into the AND rule either by clicking on the flash icon or typing “/” in the Choose a value text box. Then search for “success” which will give you access to the Refresh Status dynamic content (output of this dynamic content is always either “Success”, “Failed”, or “Cancelled”).

Repeat this step for all Dataflows, four times in my case.

Then decide the logic how you want this condition to work.

This might depend on your specific use case, but in this example, I want to refresh the semantic model even if one of the Dataflows has failed refreshing. Accordingly, my rule says: “is not equal” to “Cancelled”. What this basically means is that the condition rule will be true when the Refresh Status of all Dataflows is either Success or Failed.

Exactly what I want.

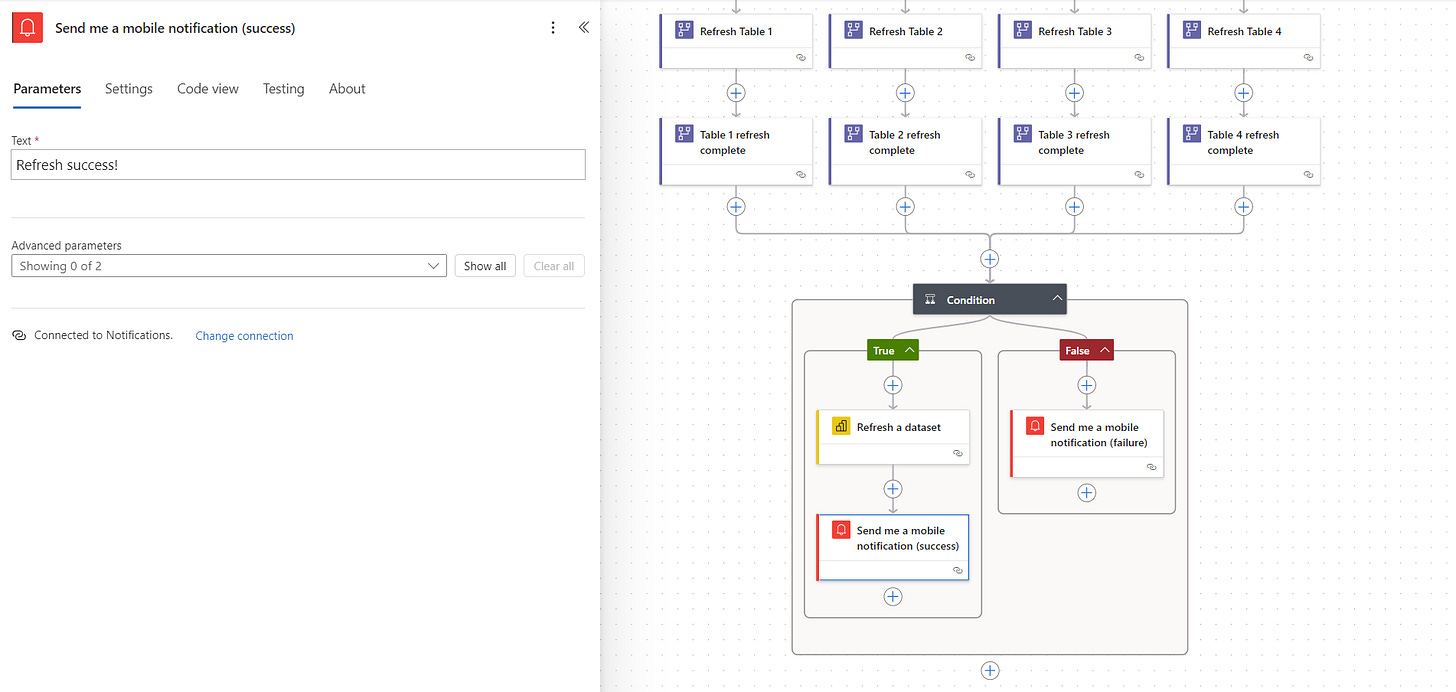

The only thing left is to choose which semantic model to refresh.

This is really simply to configure with the Refresh a dataset action. You might also want to set up a custom notification about the flow result. I prefer the Send me a mobile notification action over email since firing up that distracting inbox is that last thing I want to do when starting my workday. And besides, why not receive push notification each morning reminding that the stuff you’ve built works like a charm!👌

Just make sure you have the Power Automate App installed on your phone.

Psst... We’ll soon be on the hunt for a new data hero at Bufab! If you’re awesome—or know someone who is—drop me a DM.